This post serves as a tutorial for building machine learning models in Python. The entire codebase for this tutorial can be found on Github. After cloning the repo, you can run the code in a virtual environment, if desired, as so:

$ python3 -m venv venv

$ source venv/bin/activate

$ pip3 install -r requirements.txt

$ python3 main.py

$ deactivate

Likewise, you can run the code via Docker as so:

$ docker build -t cy_young . && docker run cy_young main.py

The Problem

Our goal is to predict how many points a pitcher will get in the Cy Young vote. Each observation represents the number of votes a pitcher received in a given year (pitcher + year = row). If a pitcher did not receive any votes in a given year, they are not included in the dataset.

The data is sourced from the Lahman database. The Cy Young voting data is available from 1956-2016. Common pitching stats such as ERA, wins, losses, and strikeouts are used to predict the number of points won in the voting. For full features, refer to data/pitching_stats.csv in the repository.

Root Directory

In the root, we have two Python files: constants.py and main.py. The former defines global constant variables, and the latter is the entry point of our code. We will review both in turn.

Below is the full code for constants.py. As you can see, we define several constant variables we are likely to reference multiple times, such as directories and the name of our target. We also define a dictionary that dictates what models we will train: scikit-learn’s gradient boosting, XGBoost, CatBoost, and LightGBM. As you might infer, all are variants of gradient boosted trees. The key of our dictionary is the model’s name, and the value is a list containing the callable model function, the hyperparameter search space, and the number of iterations to run when tuning the model’s parameters. To note, we elect for a smaller number of iterations when training CatBoost as this model, at least on my machine, took considerably longer than the others. We also reduce the number of trees in CatBoost from 1,000 to 250 to speed up training, which means we must tune the learning rate parameter to compensate. The search spaces we define are distributions. When we train our models, we use a Bayesian optimization routine to select promising areas of the distribution to explore, based on past optimization results.

main.py is a simple script that executes our code. In this repository, main.py is the only script that runs any code, which is a common convention, though not one that can always be followed. I am a believer in short and straightforward execution scripts when possible. Most of our code is written in custom modules (e.g. modeling/modeling.py) and imported into main.py. Therefore, we can read the function calls in our execution script and easily have a solid idea of what is happening. To note, the @timer decorator on the main function will inform us how long our process takes to run.

Data Directory

In our data directory, we have a file called data.py, which includes a function to ingest and clean our csv files. This becomes our data for modeling.

Helpers Directory

In our helpers directory, we have a file titled helpers.py. This script is meant to house functions for various “helper” processes, such as programmatically creating directories, producing plots, and cleaning data. Each function has a docstring to fully explain its functionality, and all functions are pretty straightforward. I will call out the FeaturesToDict class. By using inheritance from scikit-learn (BaseEstimator and TransformerMixin), we can create a custom transformer for use in our scikit-learn pipeline (more on this detail in the next section).

Modeling Directory

Thus far, we have not delved too deep into our code. However, our modeling code has some important nuances that we will review closely. Within the modeling directory, all of our code is housed in modeling.py.

First, we need the following import statements.

Our first function creates a custom train-test split. Oftentimes, we take a random 70-80% of observations for training and use the remaining 20-30% for testing. For this specific modeling task, such an approach could be problematic. For example, Randy Johnson appears in our dataset 10 times. If we did a random split, eight observations might end up in the training set and two in the testing set. Our model might learn a latent individual-level effect for Randy Johnson based on his distinct statistics. Therefore, it would be able to better predict those two observations in the testing set. In this case, we would have leaked information from our training set into our testing set, and our holdout performance would no longer be a reliable bellwether for how the model will generalize (i.e. we have introduced bias). Therefore, we assign a percentage of randomly-chosen pitchers to be in the testing set and ensure they will not exist in the training set.

You might be thinking, “when using cross validation, won’t we run the same risk of individuals being in both our training and testing sets?” Yes, we will! Therefore, we need to define a function to produce custom cross validation splits.

We next define our scikit-learn pipeline, an incredibly valuable and useful tool. The Pipeline class from scikit-learn allows us to chain transformers to prepare our data. This approach has multiple benefits, chief among them are 1) prevention of feature leakage and 2) easier management of preprocessing functions. Feature leakage occurs when we use information from our testing data to inform decisions about our training data. For instance, when we scale features using all of our training data, if we later use k-fold cross validation, we will have feature leakage. That is, the data we used for testing in the cross validation fold was previously employed for scaling the data. The scikit-learn pipeline prevents this issue by fitting the transformations (e.g. scaling the data) on only the training folds during cross validation. Likewise, we can serialize our entire pipeline, which includes all of our preprocessing in this case. This means that, after we read our serialized pipeline from disk, we can run our model through the pipeline to apply all of the requisite preprocessing and obtain predictions in one fell swoop. We don’t have to call other preprocessing functions. This is quite handy.

In our pipeline, we define different preprocessing steps for numeric and categorical features. We accomplish this aim via scikit-learn’s ColumnTransformer. For our numeric features, we impute nulls with the mean and scale the data. For categorical features, we impute nulls with a constant string and use scikit-learn’s DictVectorizer to handle one-hot encoding. The DictVectorizer expects our data in dictionary format, hence we use our custom FeaturesToDict class from helpers/helpers.py. This approach for handling categorical features has a major benefit over something like the pandas’ get_dummies method. Namely, if the model in production encounters a category level it has never seen before, it will not error out. Pandas’ get_dummies() requires some specific preprocessing to prevent errors when encountering new categories, which adds needless complexity.

Additionally, all features are subject to a function to drop certain features. Likewise, scikit-learn’s SelectPercentile is leveraged to weed out features that are detriments to model performance.

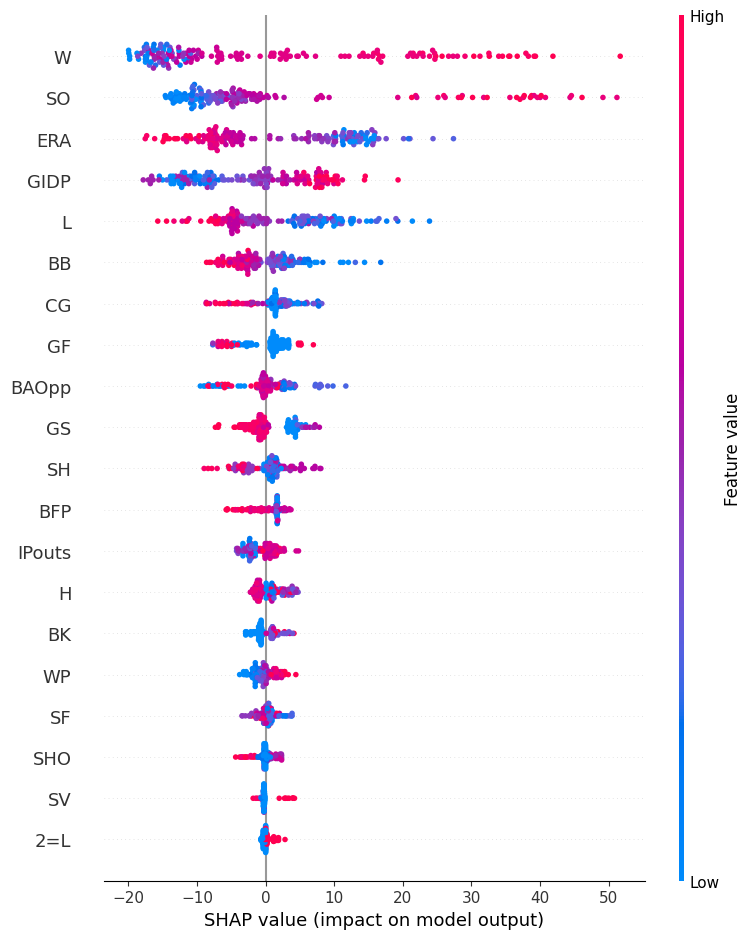

In modeling.py, we also define a function to produce SHAP values, which show features’ contributions to predictions. If you are interested in learning more about SHAP values, I recommend this article as a starting place.

Getting the SHAP values when using an even somewhat complicated pipeline is a bit of a pain. Concretely, we have to isolate the feature names from within our pipeline and preprocess our data using the relevant steps of the pipeline, which is shown in the code below.

Lastly, we come to our function to actually train our model. As you might recall from main.py, the below function is called for each item in MODEL_TRAINING_DICT defined in constants.py. Using such a dictionary allows us to easily iterate over different models. While we won’t review every line in our model training function, we will review a few key items.

First, the function argument “get_pipeline_function” expects a callable so that we can produce a scikit-learn pipeline. In main.py, you can see that we pass our construct_pipeline function into this argument, which was described previously. This approach enables us to insert different models into the same pipeline template and not repeat code needlessly.

Second, we use Hyperopt to tune our model. This approach is a paradigm shift from both grid search and randomized search, which are brute-force methodologies. Hyperopt is a Bayesian optimization library, meaning we will explore promising hyperparameters based on past performance. For a solid initial primer on Hyperopt, I recommend this article.

The _model_objective() function represents the objective we want to minimize (Hyperopt can only minimize functions). In essence, we want to minimize 1 – the average negative mean squared error, which is admittedly a bit confusing. To calculate cross validation scores to obtain our average score, we use scikit-learn’s cross_val_score(), which accepts negative mean squared error and not simply mean squared error. This is because scikit-learn makes a practice of having higher scores always be preferable in cross validation routines. Let’s work through an example of why we are optimizing the metric in such a way.

Let’s say we have two average mean squared error scores: 10 and 5. Since we prefer smaller values for this metric, we select the model that produced the score of 5. If we make the score negative, the value becomes -5 and is the maximum of the two values.

In our objective function, we take 1 minus the average negative mean squared error. If we do that for both scores stated previously, which were turned negative, we get 11 and 6. The score of 6 represents our best performance (originally a raw mean squared error of 5), and the model that produced it will be selected.

Our Hyperopt framework will minimize our objective function, which we can see chooses the model with the best mean squared error (the lowest value).

Third, our code has some error handling when trying to produce SHAP values. The current version of XGBoost does not play well with the shap library.

Model Results

Without much feature engineering, we get the following results on our testing set. LightGBM is our best performer.

| Model | MSE | RMSE | MAE |

| scikit-learn gradient boosting | 1352 | 37 | 27 |

| XGBoost | 1189 | 35 | 26 |

| LightGBM | 1043 | 32 | 24 |

| CatBoost | 1158 | 34 | 26 |

Considering that our target, points won, can range from 0 to about 250, our model isn’t great. However, some of the big “swings and misses” are believable. As one example, in 1956 Whitey Ford went 19-6 with a 2.47 ERA. The model thought he would get 21 points, but he only got 1. In our testing set, there are a number of similar cases.

The below is a plot of LightGBM’s SHAP values for the testing data. A discussion of a few features should hopefully make the interpretation clear. Let’s take wins (W) for example. A higher number of wins (red dots) oftentimes drives up points won. This makes sense. Likewise, a lower ERA (blue dots) tends to increase points won. Again, this makes sense. Our model is making sensible choices.

Conclusion

Our goal was not necessarily to build the best possible model. To do so, we would need to try more models and engineer additional features. Rather, we wanted to lay out a framework for training models. Hopefully, you find it useful and inspiring for your own work.