As spring training approaches, excitement for the new season builds. Fans and commentators alike discuss teams’ prospects, and several organizations release player projections. Trying to anticipate what will happen is part of the thrill. In that spirit, this post sets out to find a machine learning model that predicts a hitter’s OPS for the upcoming season.

Data used in OPS Modeling

The target, the value we’re trying to predict, is a player’s OPS for the next season. OPS (on-base plus slugging) is the sum of on-base percentage, which measures how often a player reaches base, and slugging percentage, which measures how many total bases a player averages per at bat. The features used to predict the target include the following:

- Offensive statistics from the previous 5 seasons

- Postseason offensive statistics from the previous season

- The previous season’s team, primary position, and awards
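For reference, the target itself can be computed from standard counting stats. The minimal Python sketch below uses the usual formulas, OBP = (H + BB + HBP) / (AB + BB + HBP + SF) and SLG = total bases / AB. The function names and the sample stat line are hypothetical, chosen only for illustration.

```python
def on_base_percentage(h: int, bb: int, hbp: int, ab: int, sf: int) -> float:
    """OBP = (H + BB + HBP) / (AB + BB + HBP + SF)."""
    denom = ab + bb + hbp + sf
    return (h + bb + hbp) / denom if denom else 0.0


def slugging_percentage(h: int, doubles: int, triples: int, hr: int, ab: int) -> float:
    """SLG = total bases / AB, where singles = H - 2B - 3B - HR."""
    singles = h - doubles - triples - hr
    total_bases = singles + 2 * doubles + 3 * triples + 4 * hr
    return total_bases / ab if ab else 0.0


def ops(h: int, doubles: int, triples: int, hr: int,
        bb: int, hbp: int, ab: int, sf: int) -> float:
    """OPS is simply OBP + SLG."""
    return on_base_percentage(h, bb, hbp, ab, sf) + slugging_percentage(h, doubles, triples, hr, ab)


# Hypothetical season: 500 AB, 150 H (30 2B, 2 3B, 25 HR), 60 BB, 5 HBP, 5 SF.
season_ops = ops(h=150, doubles=30, triples=2, hr=25, bb=60, hbp=5, ab=500, sf=5)
print(round(season_ops, 3))  # 0.895 (OBP 0.377 + SLG 0.518)
```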

Models were trained on data from 2000–2016. The data source is the Lahman database; at the time of publication, 2017 data had not yet been released.
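The “previous 5 seasons” features can be built from the Lahman `Batting` table, which has one row per player, season, and stint (a player traded midseason has more than one stint). The pandas sketch below is one possible approach, not necessarily how the features in this post were built: it sums stints into player-seasons, then attaches each stat from seasons t-1 through t-n as separate columns. `add_lagged_stats` and the toy data are hypothetical.

```python
import pandas as pd


def add_lagged_stats(batting: pd.DataFrame, stats: list[str], n_lags: int = 5) -> pd.DataFrame:
    """Return one row per (playerID, yearID) with stats from the previous n_lags seasons."""
    # Collapse multiple stints in a year into a single player-season (counting stats sum).
    season = batting.groupby(["playerID", "yearID"], as_index=False)[stats].sum()
    out = season[["playerID", "yearID"]].copy()
    for k in range(1, n_lags + 1):
        lagged = season.copy()
        # Shift the year forward by k so season t-k lines up with season t.
        lagged["yearID"] = lagged["yearID"] + k
        lagged = lagged.rename(columns={s: f"{s}_lag{k}" for s in stats})
        # Left join: a season the player did not play stays NaN.
        out = out.merge(lagged, on=["playerID", "yearID"], how="left")
    return out


# Toy data: player "a" has two stints in 2016; player "c" skipped 2014.
toy = pd.DataFrame({
    "playerID": ["a", "a", "a", "a", "b", "c", "c"],
    "yearID": [2014, 2015, 2016, 2016, 2016, 2013, 2015],
    "stint": [1, 1, 1, 2, 1, 1, 1],
    "HR": [10, 20, 12, 18, 5, 7, 9],
})
features = add_lagged_stats(toy, ["HR"], n_lags=2)
print(features)
```

Joining on `yearID + k` rather than using `shift()` means a skipped season shows up as a missing value instead of quietly pulling an older season forward. Each row’s own season plays the role of the target season, and every feature column comes from an earlier one, so no same-season information leaks in.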

No information from the season being predicted is used, for two reasons: 1) to prevent feature leakage and 2) to keep the model applicable before the season starts. On the second point, we may not always know which team a player will play for this season (cc: Eric Hosmer), but we always know which team they played for last season.

Machine Learning Modeling Results

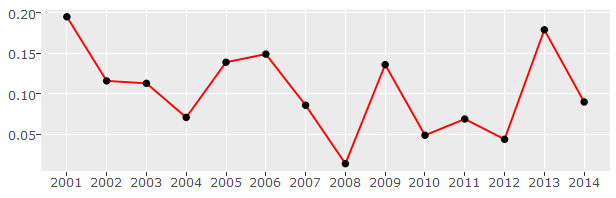

I trained a number of regression models using the scikit-learn and Keras Python libraries. The best model was lasso regression, with a root mean square error (RMSE) of 0.170, which is somewhat lackluster. RMSE is expressed in the same units as OPS, so an RMSE of 0.170 means our OPS predictions are typically off by roughly 0.170 (e.g., the actual OPS was 0.800, but the model predicted 0.970). Many predictions land close to the actual value, but in other cases the model misses badly, and because RMSE squares each error before averaging, those large misses drive it up disproportionately.
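To make the RMSE figures concrete, here is a small Python sketch of the metric plus a lasso pipeline in scikit-learn. The `rmse` helper, the synthetic data, and `alpha=0.001` are illustrative assumptions, not the tuned model or the real data behind the 0.170 result.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def rmse(y_true, y_pred) -> float:
    """Root mean square error, reported in the same units as OPS."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# The single-prediction example from the text: actual 0.800, predicted 0.970.
print(rmse([0.800], [0.970]))  # roughly 0.17

# Squaring lets one large miss dominate several small ones.
actual = [0.700, 0.700, 0.700, 0.700]
predicted = [0.710, 0.690, 0.700, 1.100]
print(rmse(actual, predicted))  # roughly 0.20, driven almost entirely by the 0.400 miss

# Hypothetical training setup on synthetic data (NOT the post's real features).
# Features are standardized so the L1 penalty treats them on a common scale.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 0.750 + 0.050 * X[:, 0] + rng.normal(scale=0.100, size=200)
model = make_pipeline(StandardScaler(), Lasso(alpha=0.001))  # alpha is illustrative
model.fit(X[:150], y[:150])
holdout_rmse = rmse(y[150:], model.predict(X[150:]))
print(holdout_rmse)
```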

Why does the model struggle? One likely reason is that a single global model may not be the best approach. Players can differ vastly, and building separate models for different segments of players could yield better results. Anecdotally, the model struggles with high-OPS players: its predictions are too conservative. Future work could explore ways to correct for this.
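One way to try the segmented idea is to fit a separate lasso per player segment and fall back to a global model for segments too small to fit. The sketch below assumes, hypothetically, that the first feature column is prior-season OPS and splits players at 0.850. Both the segmentation rule and the `SegmentedRegressor` class are illustrations, not something tested in this post.

```python
import numpy as np
from sklearn.linear_model import Lasso


class SegmentedRegressor:
    """One Lasso per segment, plus a global Lasso used for small or unseen segments."""

    def __init__(self, segment_fn, alpha=0.001, min_rows=30):
        self.segment_fn = segment_fn  # maps a feature matrix to an array of segment labels
        self.alpha = alpha
        self.min_rows = min_rows
        self.models = {}
        self.global_model = None

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
        self.global_model = Lasso(alpha=self.alpha).fit(X, y)
        labels = self.segment_fn(X)
        for label in np.unique(labels):
            mask = labels == label
            if mask.sum() >= self.min_rows:  # skip segments too small to fit reliably
                self.models[label] = Lasso(alpha=self.alpha).fit(X[mask], y[mask])
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        labels = self.segment_fn(X)
        preds = self.global_model.predict(X)  # default: global model
        for label, model in self.models.items():
            mask = labels == label
            if mask.any():
                preds[mask] = model.predict(X[mask])  # override with the segment model
        return preds


def ops_tier(X):
    """Hypothetical rule: column 0 is prior-season OPS; 0.850 and up is 'high'."""
    return np.where(X[:, 0] >= 0.850, "high", "rest")


# Synthetic data where high-OPS hitters follow a different relationship.
rng = np.random.default_rng(1)
prev_ops = rng.uniform(0.55, 1.0, size=400)
X = np.column_stack([prev_ops, rng.normal(size=400)])
y = np.where(prev_ops >= 0.850, 0.2 + 0.8 * prev_ops, 0.35 + 0.5 * prev_ops)
y = y + rng.normal(scale=0.03, size=400)
model = SegmentedRegressor(ops_tier).fit(X, y)
preds = model.predict(X)
```

Keeping the global model as a fallback means a segment with too few players still gets a prediction instead of an error.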

Interactive App using the Best Model

The interactive app below lets you explore predicted vs. actual OPS for selected players. Hover over the data points! (If the app doesn’t load, please refresh the page.)